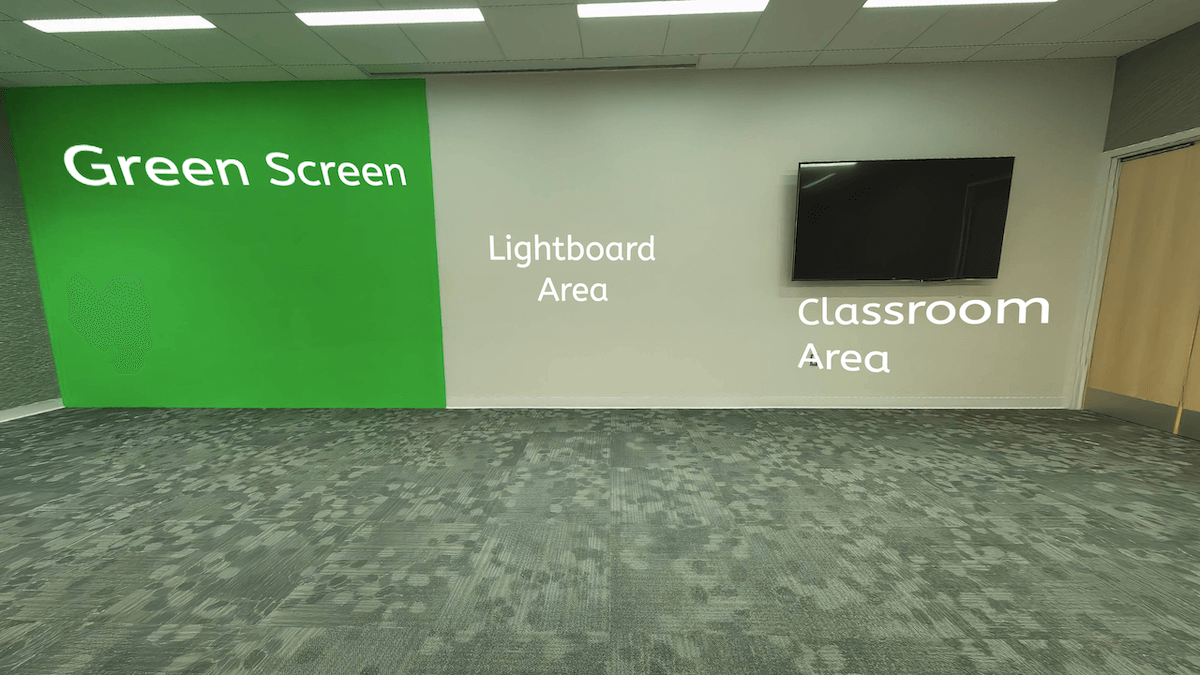

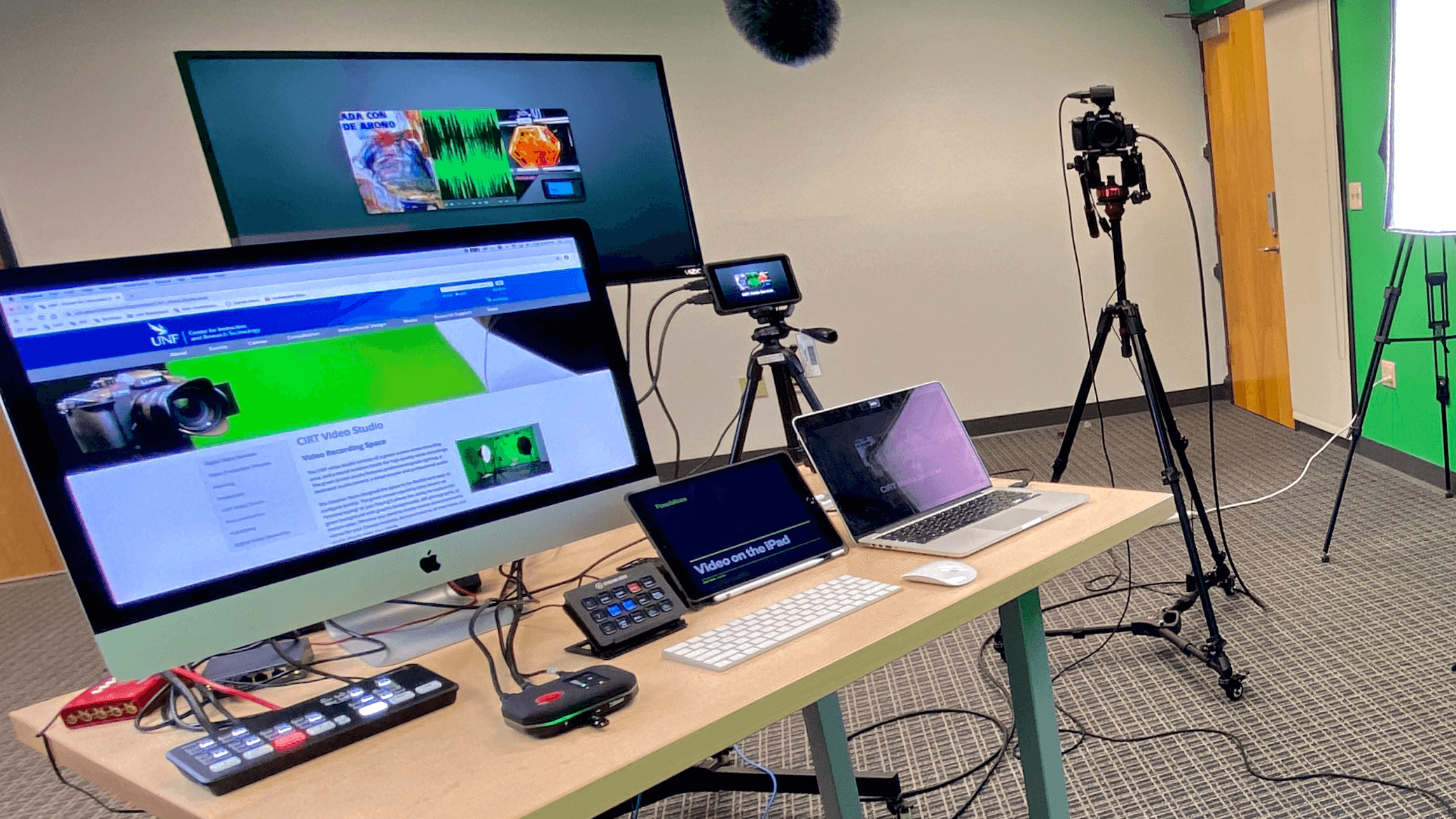

YANS – Yet Another New Studio

Lucky 7. We have just completed the renovation of a new space that will soon be the New CIRT Studio. (Make sure you scroll to the bottom of this post to see the current view

I screwed up a faculty recording yesterday. As one of my colleagues said, “it couldn’t have happened to a nicer person.” I couldn’t agree more. I won’t “out” the faculty member, yet, but they were

Let me be frank (even though my name is Andy). I do hate looking at my blog and seeing my most recent post was almost a year ago. And then I remember that since then

UPDATE 2021: This landed at the top of my blog because of an edit I made in MarsEdit – blog-editing software from Red Sweater Software. It was originally published in May of 2005.

4 Years and 7 blog posts ago, I wrote about getting my FAA-issued UAS (Unmanned Aircraft System) Remote Pilot’s license. I also mentioned some “trajectories have been altered”. There is never a promise in

“Hopefully, Andy will blog…” was the command that Jim Groom gave as he wrote about the Reclaim Today episode that I joined with both Jim and Tim Owens, the founders of Reclaim Hosting. Of course,

A blog post. No apologies for time elapsed since the last one. No promises of when the next one will come. However, this post ushers in an exciting (to me) new tool to refresh the

There are many good video essay channels out there, but one that was particularly instructive to me was Every Frame a Painting. Sadly, they will cease to produce content. A tip of the hat to

It’s been so long, I don’t know the exact date. However, today being the last day in October, I can say unequivocally that I have been an Instructional Technologist now for 25 years!

One week ago (5-6-17) I had a dilemma. I woke up to a warm and hazy morning, but it wasn’t hazy because of humidity. It was the haze of smoke – from the West Mims